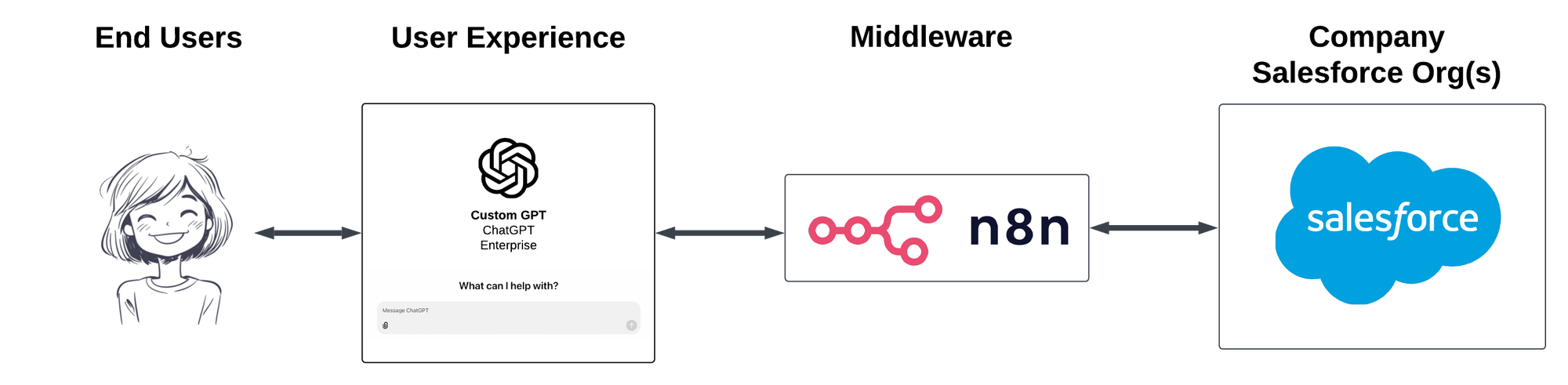

A growing number of organizations want to leverage their investment in Enterprise LLM systems with their Salesforce data but are unsure how to integrate them in a secure, maintainable way. This is a simple example of creating a Custom GPT Action that can return Account and Opportunity information from a Salesforce Org.

This article is intended to be informative for IT teams who are being asked to integrate their Enterprise ChatGPT user experience with backend systems such as Salesforce and who want to do this in a maintainable and scalable way with as little code as possible.

Want help connecting ChatGPT Enterprise with Salesforce and other systems? Our team at Altimetrik can help. Connect with me to learn how!

In this example, I'm using the low-code/no-code automation service n8n.io as middleware between ChatGPT and Salesforce. I chose n8n for a few reasons that apply especially well to enterprise use cases:

- Hosting Options: You can self-host n8n or use the cloud-hosted option. Self-hosting suits organizations whose privacy and/or security policies limit infrastructure choices, while the cloud-hosted option is a quick win for teams without their own infrastructure support.

- Maintainability: My goal is to demonstrate a low-code/no-code solution that doesn't require a lot of engineering overhead to maintain or to release new features over time.

- Efficiency and Scalability: This relatively simple solution is easy to deploy, upgrade, scale up, and integrate with additional systems as needed.

A note on Security and Trust:

You might ask, "But what about the Salesforce Trust Layer?" And that's a good question. Salesforce's approach to Trust with Agentforce/Einstein is to mask customer data to ensure that company data from Salesforce isn't sent to the LLM driving the Generative AI functionality. That approach stems mainly from early concerns that LLM providers were training the models on user data. Salesforce is also being careful to follow its own Trust & Compliance documentation regarding how it shares data with its own sub-processors, but that doesn't necessarily limit how an organization chooses to use its own data.

LLM providers have evolved and now offer paid enterprise-oriented plans that don't train models on user data. I've found that our enterprise clients are comfortable with these options when using their own paid enterprise plans, whether that be with OpenAI, Microsoft, AWS, Google, Anthropic, or others.

An important assumption in this example is that an organization has undergone, or will undergo, security and privacy reviews of these technologies before use. Evaluating the security and privacy policies of each technology is therefore left as an exercise for the reader and their IT and InfoSec teams:

- OpenAI offers a ChatGPT Enterprise license with enterprise-oriented security policies and controls, and publishes its security and privacy policies.

- n8n documents its security and privacy policies. As noted, n8n can be used as a cloud-hosted or self-hosted option.

- Salesforce documents its security and privacy policies at trust.salesforce.com.

Overview of the Solution

This solution assumes every user has a ChatGPT Enterprise license and will use a Custom GPT deployed within that ChatGPT Enterprise account. It also requires one Salesforce user license for the integration between Salesforce and n8n (this system is not designed to log into Salesforce with the end user's credentials). I recommend limiting this Salesforce integration user's access according to the principle of least privilege, but I won't go into the details of setting up an integration user account here.

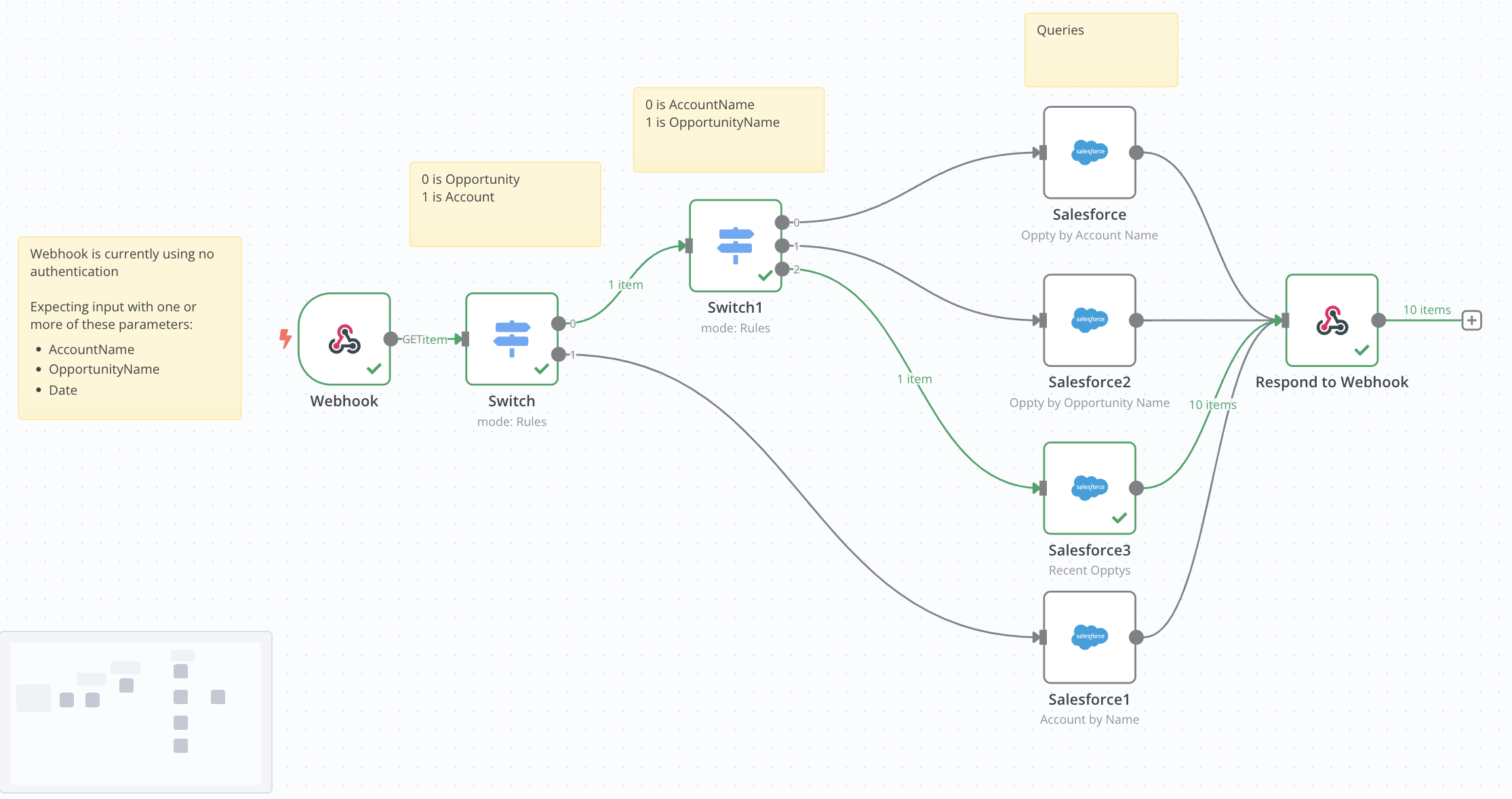

In n8n, we will create a Webhook interface for our ChatGPT Custom GPT to call. It will look like this. My goal here is to create a simple branching logic that allows the user to take these pre-defined actions on their Salesforce data:

- Query an Opportunity by Name

- Query Opportunities by Account Name

- Query for Recent Opportunities

- Query an Account by Name

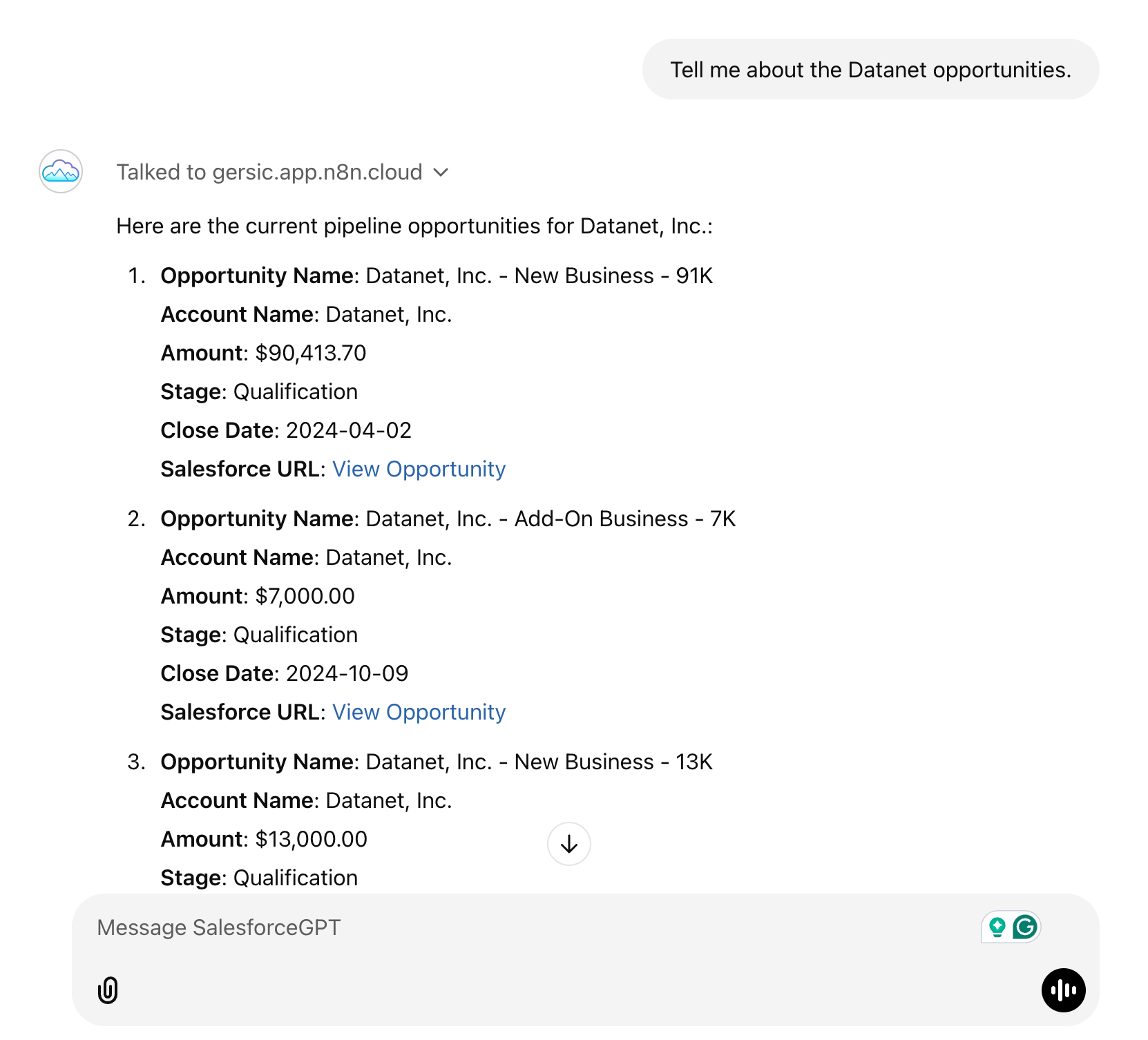

Here's how it works from the Custom GPT itself. In case you're wondering, the data in these examples comes from a Salesforce Demo Org (SDO), so it is all dummy data.

Step-by-Step Setup in n8n

This section details the n8n setup step by step, from left to right in the flow shown above.

Step 1: The Webhook

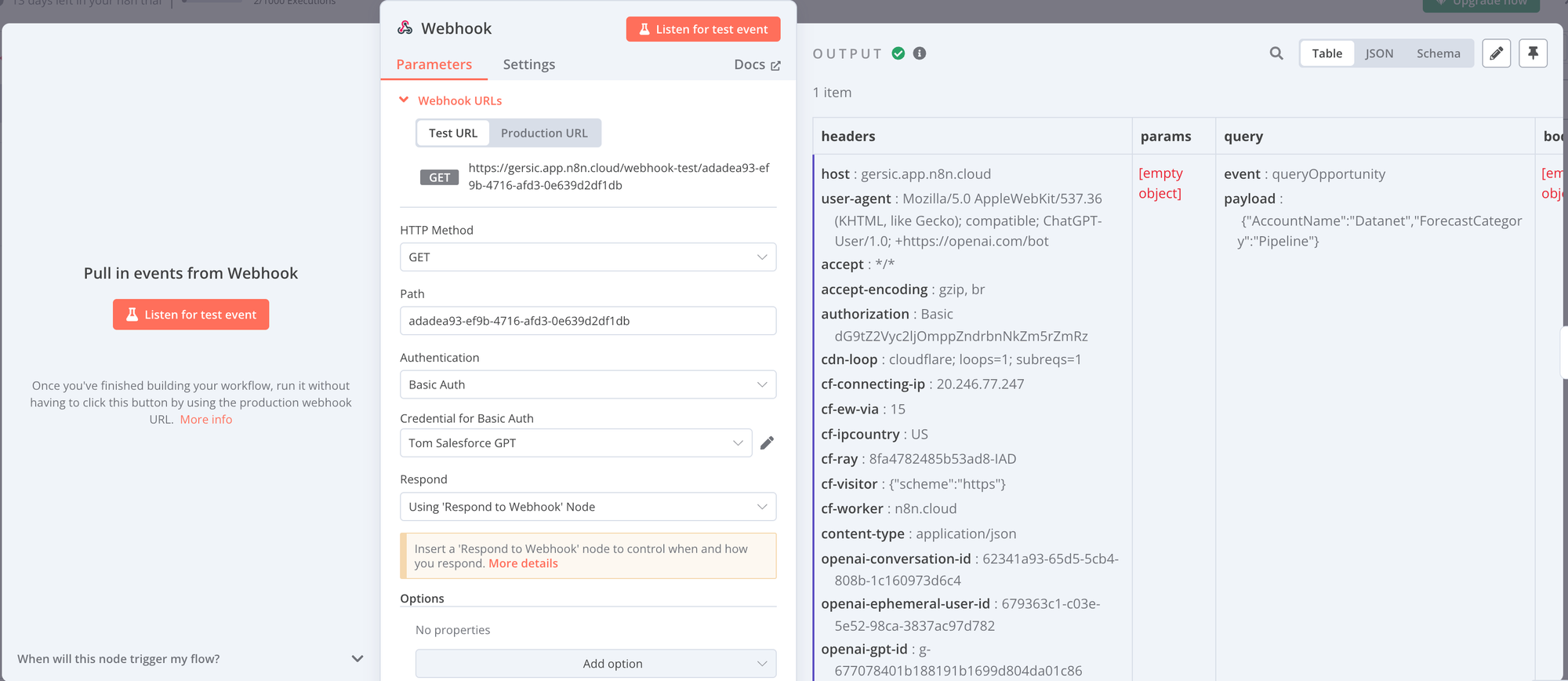

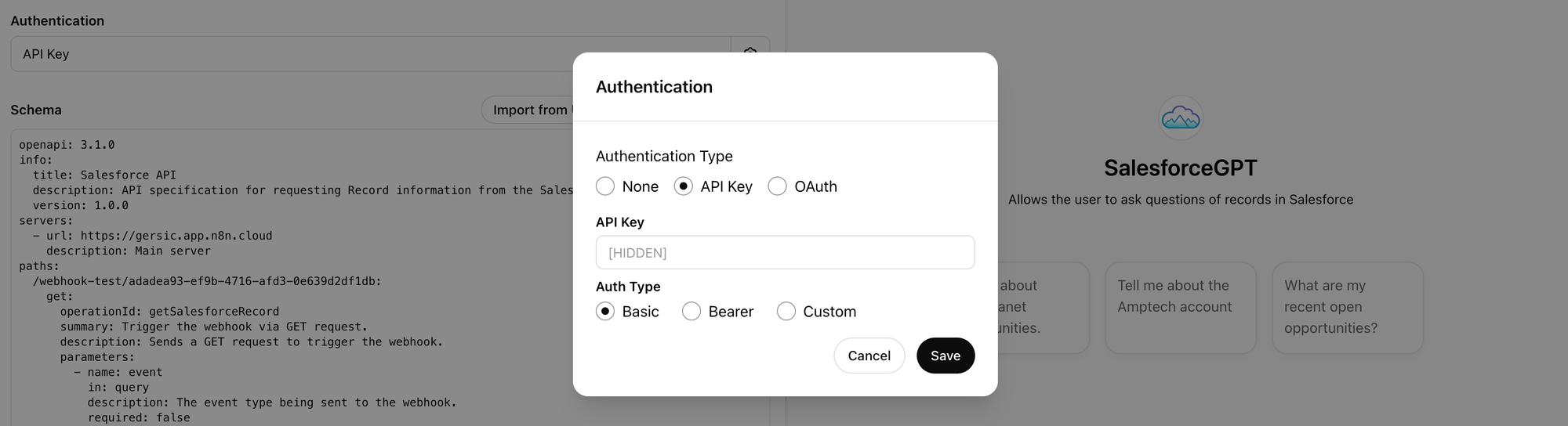

The Webhook, in this instance, is pretty simple. The main configuration option is the authentication mechanism between the Custom GPT and the Webhook on n8n. In this example, I've used Basic Auth.

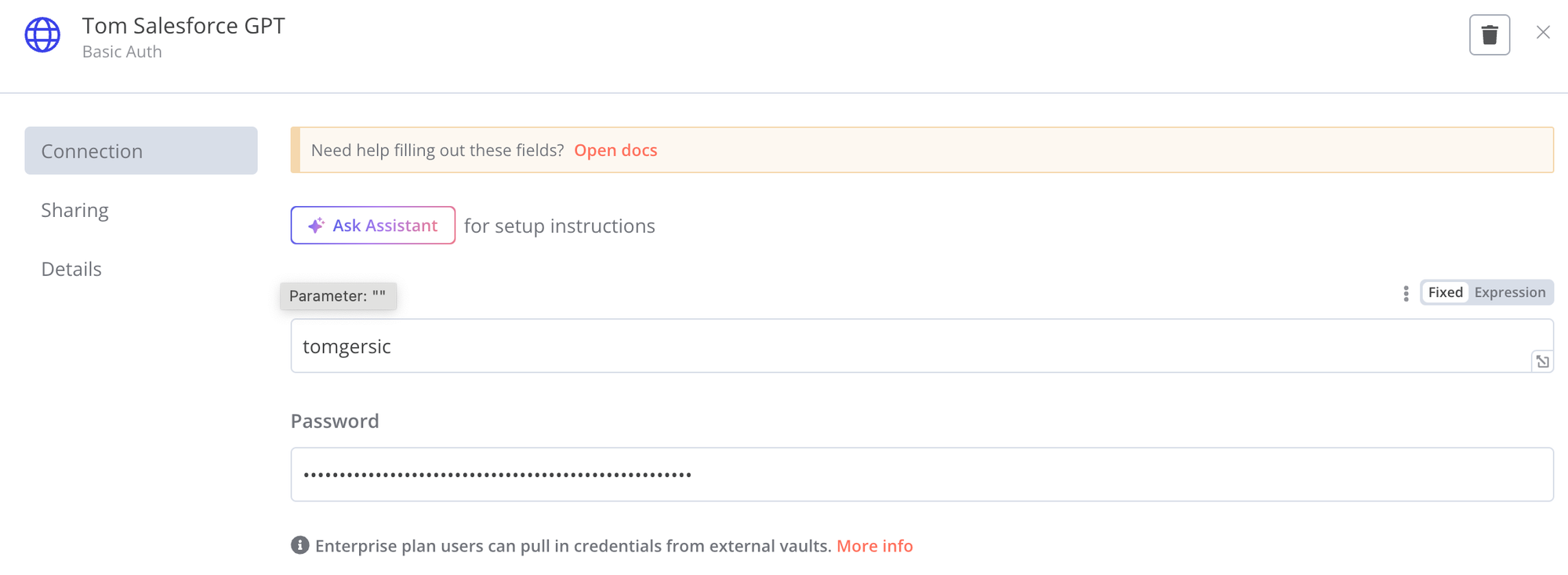

You will enter a username and password on the n8n side of this authentication.

I'll go through this in more detail later, but on the ChatGPT side, you can then use the API Key authentication type with the Basic auth type. To get the API key, combine the username and password and then encode that string as Base64. Here's an example:

Assuming this Username and Password for n8n:

- Username: tomgersic

- Password: mypassword

Follow these steps for ChatGPT:

- Combine username and password into a single string like this: tomgersic:mypassword

- While you could Google for a website that can create a Base64 representation of this string, you can also ask ChatGPT to Base64 encode this string for you. This is what I get: dG9tZ2Vyc2ljOm15cGFzc3dvcmQ=

- Enter that Base64 encoded string as your API Key. If you don't know where to do that, that's cool, just hang out for a bit and we'll get to the ChatGPT setup side of things soon.
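If you'd rather not paste credentials into a website or a chat, you can do the same encoding locally. Here's a minimal Python sketch using only the standard library; the function names are my own, and the username and password are the example values above. With the Basic auth type selected, ChatGPT sends this string in an `Authorization: Basic ...` header, which is what n8n's Basic Auth check decodes.

```python
import base64


def basic_auth_token(username: str, password: str) -> str:
    """Base64-encode 'username:password' as used by HTTP Basic Auth."""
    credentials = f"{username}:{password}"
    return base64.b64encode(credentials.encode("utf-8")).decode("ascii")


def decode_basic_auth_token(token: str) -> tuple[str, str]:
    """Reverse the encoding, splitting on the first colon (passwords may contain colons)."""
    username, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return username, password


if __name__ == "__main__":
    token = basic_auth_token("tomgersic", "mypassword")
    print(token)  # dG9tZ2Vyc2ljOm15cGFzc3dvcmQ=
    print(decode_basic_auth_token(token))  # ('tomgersic', 'mypassword')
```

Keep in mind that Base64 is an encoding, not encryption: as the second function shows, anyone who sees the token can recover the password, so treat the token with the same care as the password itself.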

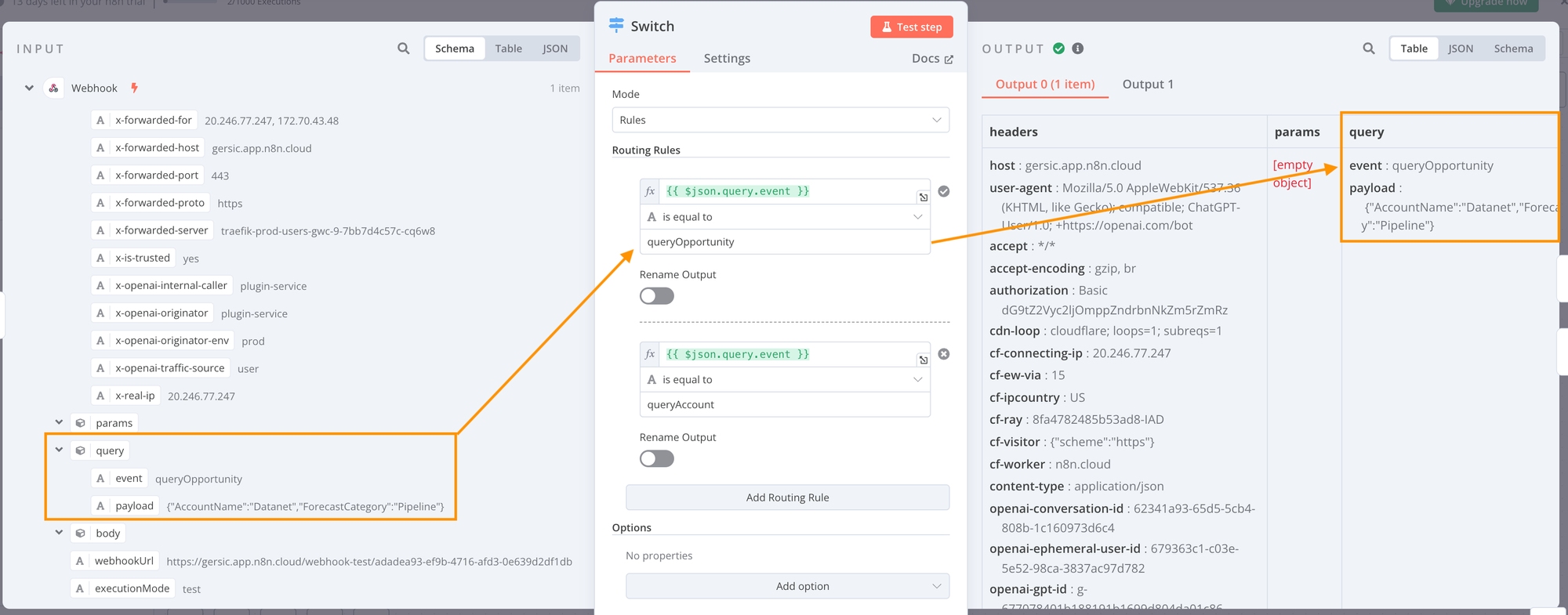

Step 2: Switches

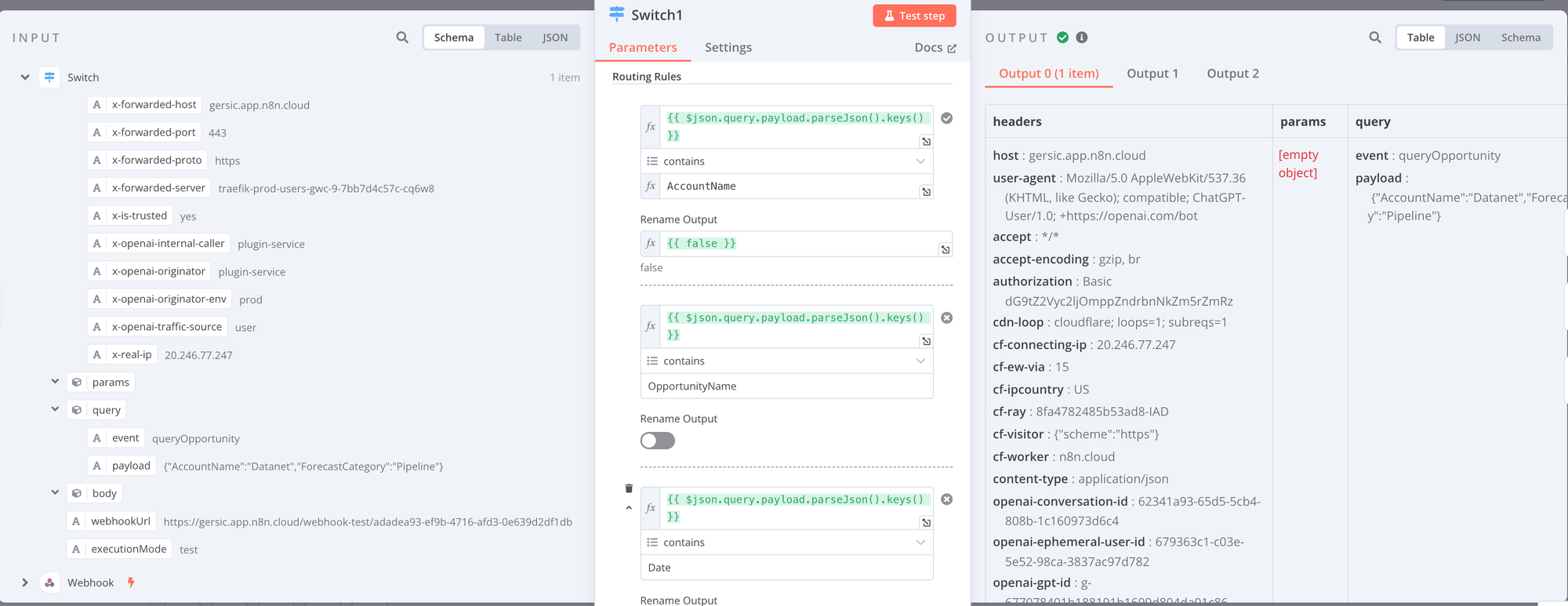

We're setting up simple branching logic that n8n will use to determine which SOQL query to send to Salesforce. The first Switch just determines whether the goal is to query the Account object or the Opportunity object. In this example, you can see that the Custom GPT sent a queryOpportunity event.

The second Switch allows Opportunities to be queried by Account Name, Opportunity Name, or Date (recent Opportunities). In this case, the Account Name "Datanet" was specified, so the next step creates a SOQL query on the Opportunity object filtered by Account Name.
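The Switch nodes are configured in n8n's UI rather than in code, but their routing logic boils down to something like the sketch below. It assumes the Custom GPT sends `event` and a JSON-encoded `payload` as query parameters (as in the schema later in this article) and uses the parameter names from the GPT instructions (AccountName, OpportunityName, Date). The branch labels are hypothetical names for the four pre-defined actions, and the order in which the Opportunity filters are checked is my assumption.

```python
import json

# Hypothetical branch labels, one per pre-defined action.
ACCOUNT_BY_NAME = "account_by_name"
OPPORTUNITY_BY_NAME = "opportunity_by_name"
OPPORTUNITIES_BY_ACCOUNT = "opportunities_by_account"
RECENT_OPPORTUNITIES = "recent_opportunities"


def route(event: str, payload_json: str) -> str:
    """Mimic the two Switch nodes: first by object, then by filter."""
    payload = json.loads(payload_json) if payload_json else {}

    # Switch 1: which Salesforce object is being queried?
    if event == "queryAccount":
        return ACCOUNT_BY_NAME
    if event != "queryOpportunity":
        raise ValueError(f"Unsupported event: {event!r}")

    # Switch 2: which Opportunity filter was supplied? (precedence assumed)
    if payload.get("AccountName"):
        return OPPORTUNITIES_BY_ACCOUNT
    if payload.get("OpportunityName"):
        return OPPORTUNITY_BY_NAME
    if payload.get("Date"):
        return RECENT_OPPORTUNITIES
    raise ValueError("queryOpportunity needs AccountName, OpportunityName, or Date")


print(route("queryOpportunity", '{"AccountName": "Datanet"}'))  # opportunities_by_account
```

Here an unknown event or a missing filter raises an error; in the n8n workflow, the equivalent could be a fallback branch that returns a 400 response, matching the 400 case in the schema.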

Step 3: Salesforce Nodes

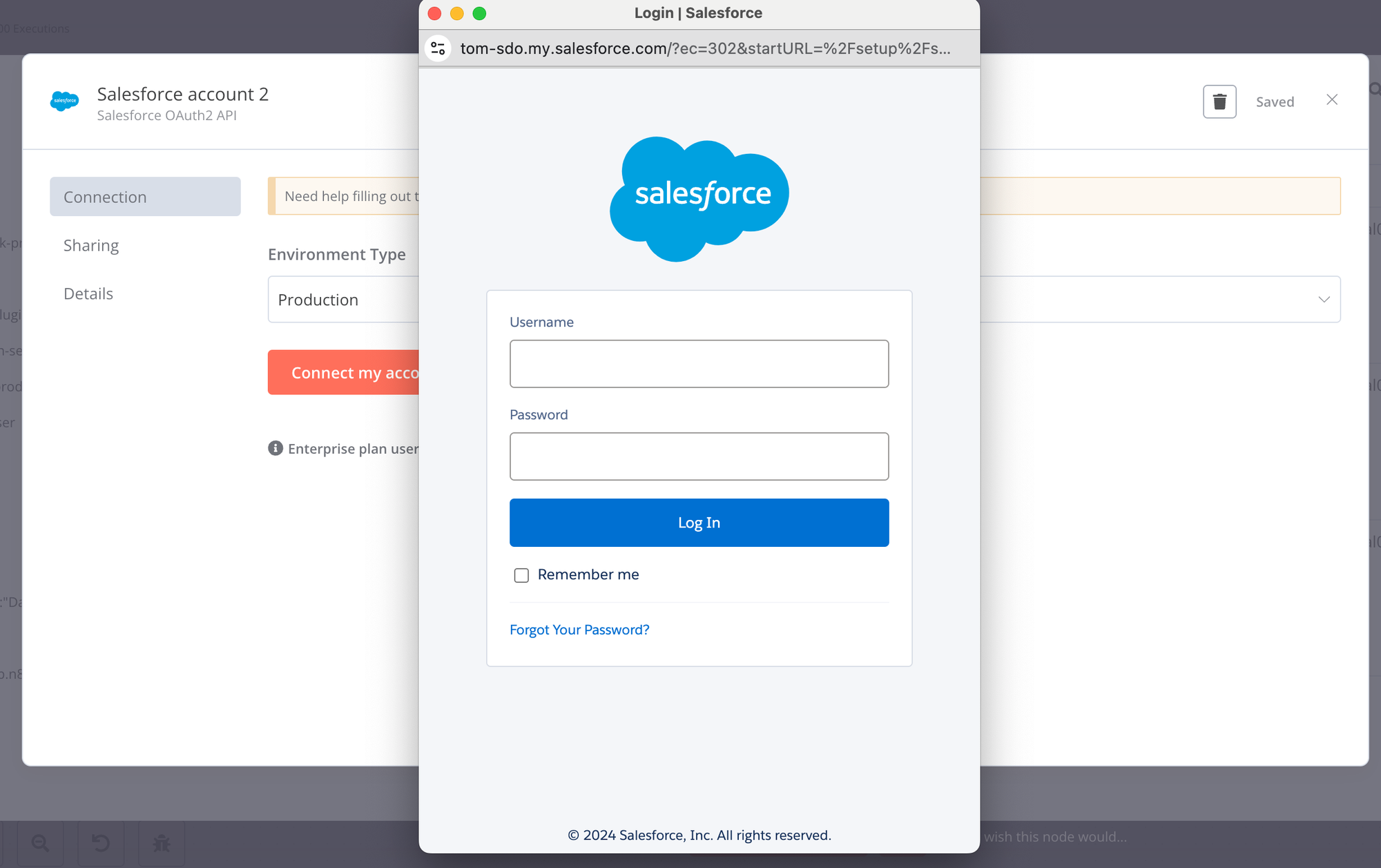

As someone who wrote OAuth 2.0 client functionality in Objective-C back when Salesforce had first implemented OAuth 2.0 but hadn't yet released the Salesforce Mobile SDK, I can tell you that implementing it by hand is super finicky and not fun at all. One massive benefit of using n8n is that it handles all the OAuth 2.0 details for you:

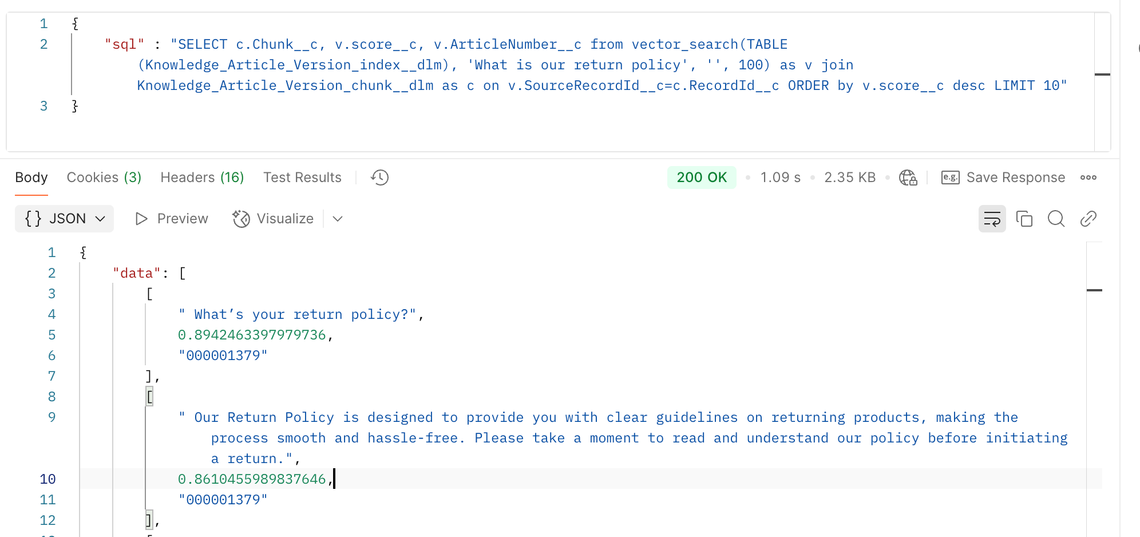

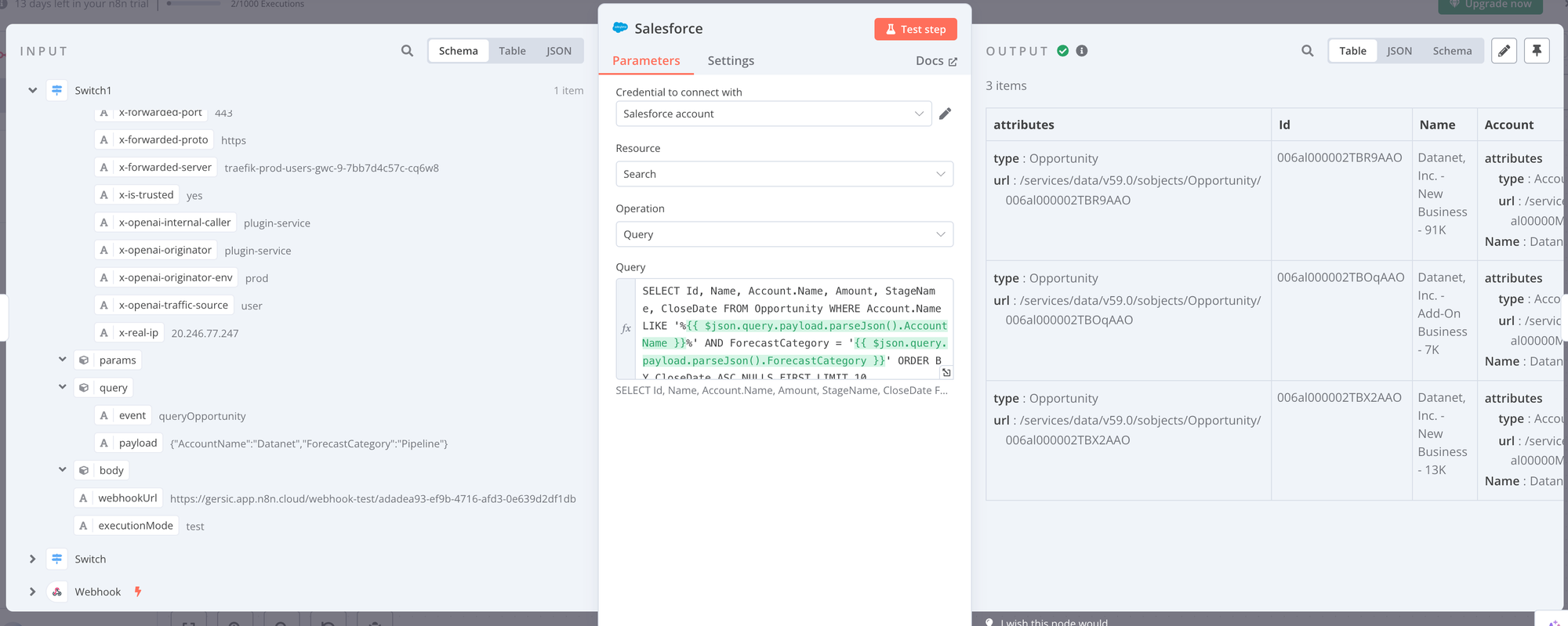

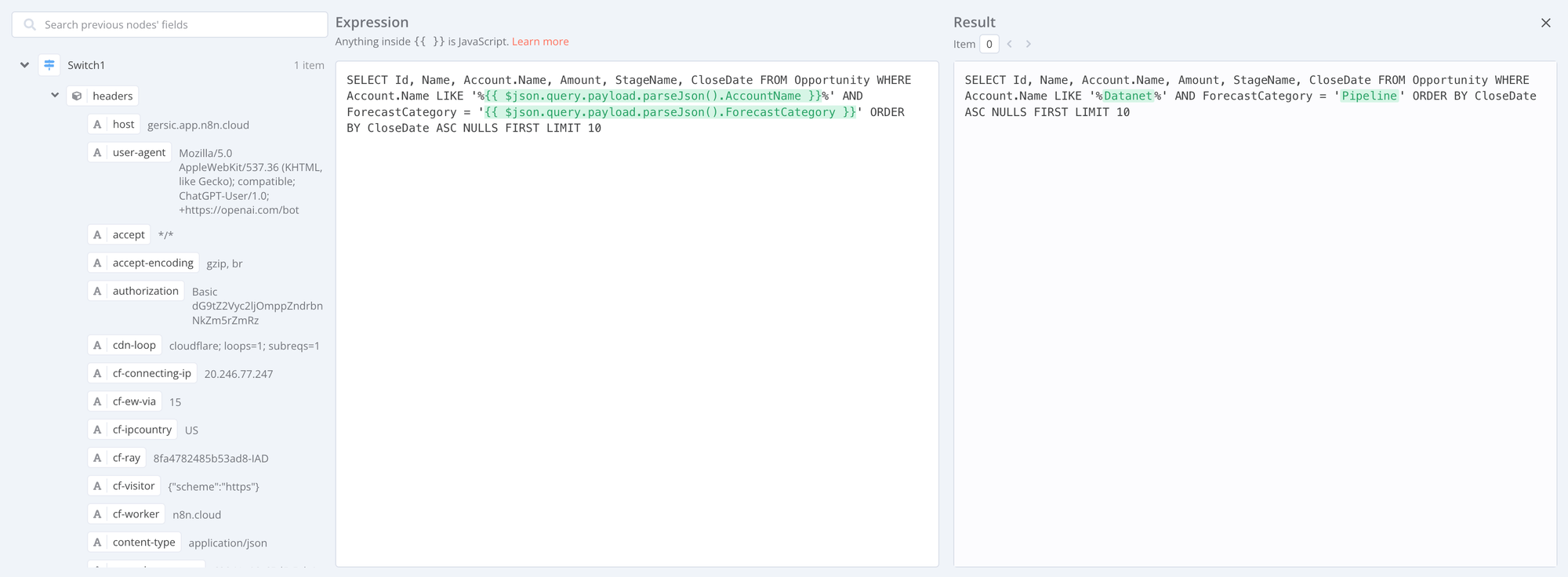

Once you have authenticated, you can create a SOQL query to get the results back to ChatGPT:

Here's the full query itself:

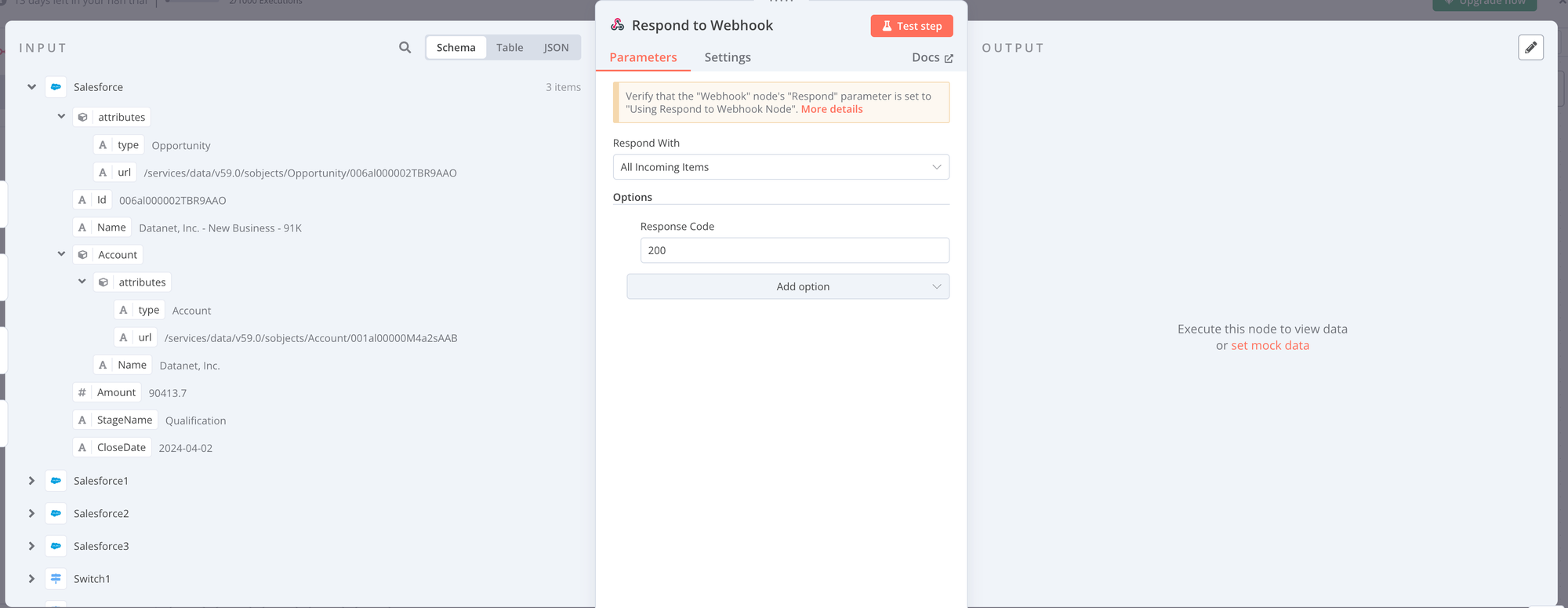
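As a rough text equivalent of the query in the screenshot, here's a sketch that builds a SOQL query for the Opportunities-by-Account-Name branch. The field list, the `LIMIT`, and the use of `ForecastCategoryName` for the Pipeline/Closed filter are my assumptions and may not match the screenshot exactly. Because values from the user's question end up inside the query string, the sketch escapes backslashes and single quotes so that a name like `O'Brien` can't break (or rewrite) the SOQL.

```python
def escape_soql(value: str) -> str:
    """Escape backslashes and single quotes for use inside a SOQL string literal."""
    return value.replace("\\", "\\\\").replace("'", "\\'")


def opportunities_by_account_soql(account_name: str, forecast_category: str = "Pipeline") -> str:
    """Build an illustrative query for the 'Opportunities by Account Name' branch."""
    return (
        "SELECT Id, Name, Account.Name, Amount, StageName, CloseDate "
        "FROM Opportunity "
        f"WHERE Account.Name = '{escape_soql(account_name)}' "
        f"AND ForecastCategoryName = '{escape_soql(forecast_category)}' "
        "ORDER BY CloseDate DESC "
        "LIMIT 20"
    )


print(opportunities_by_account_soql("Datanet"))
```

The selected fields line up with what the GPT instructions ask it to summarize (name, account, amount, stage, close date), and `Id` is included so the GPT can build the Salesforce URL.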

Step 4: Respond to Webhook

At this point, you're just sending the result to the Respond to Webhook node. Make sure to set a response code of 200 so ChatGPT knows the request succeeded.

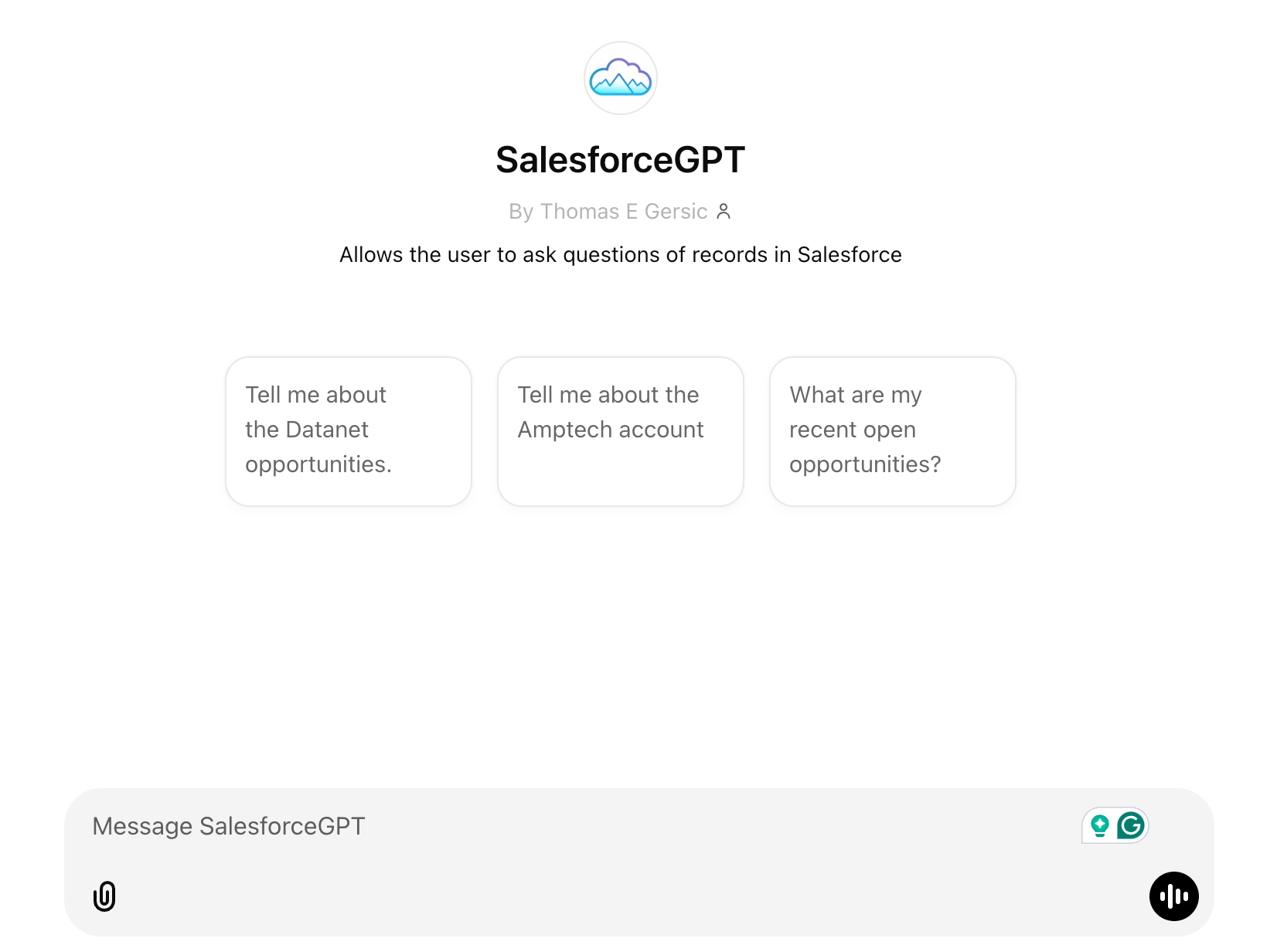

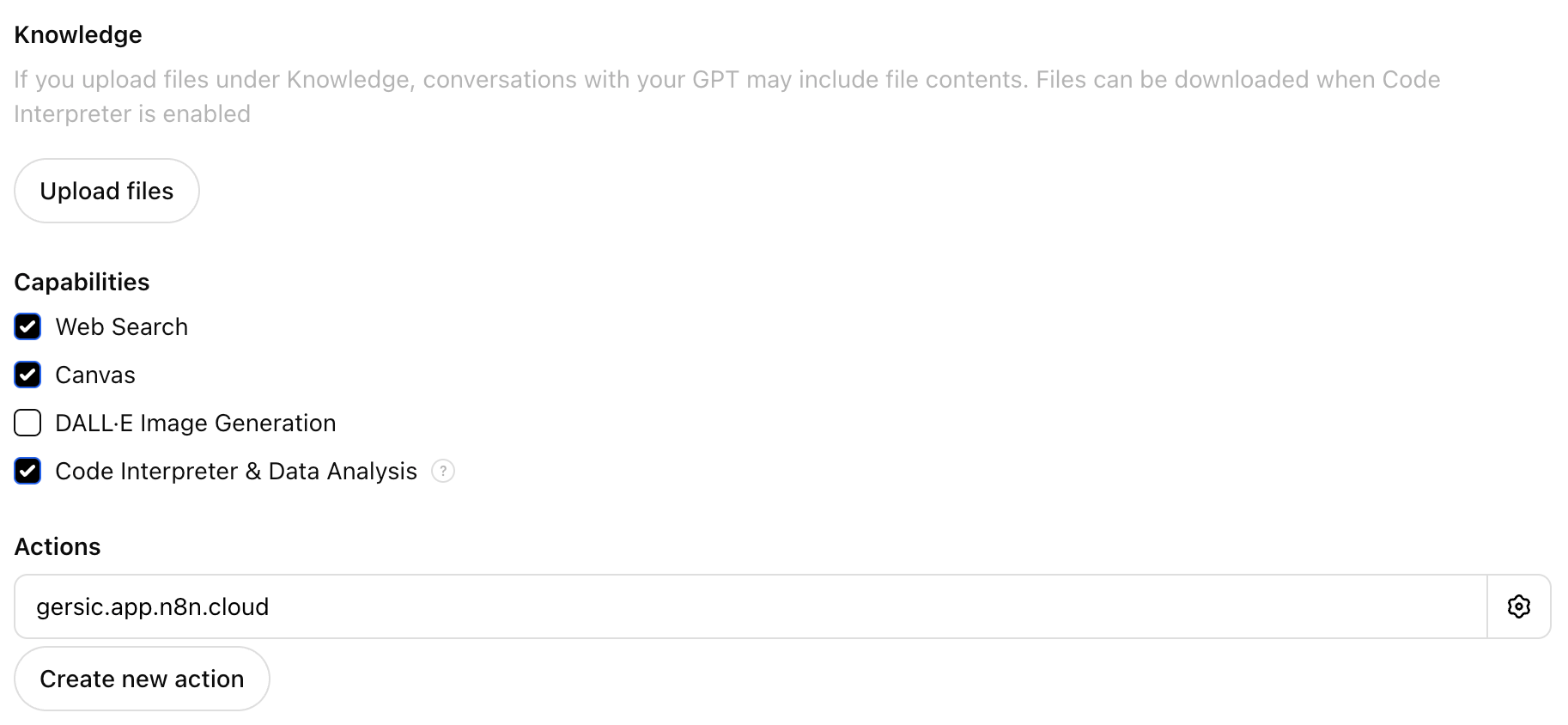
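In plain HTTP terms, that response amounts to a 200 status and a JSON body. The sketch below shows one way to shape it, assuming the records are wrapped in an object alongside the `success` and `message` fields that the schema below describes for a 200 response; the `records` key and the helper name are hypothetical.

```python
import json


def build_webhook_response(records: list[dict]) -> tuple[int, str]:
    """Return (status_code, json_body) for a successful query (illustrative)."""
    body = {
        "success": True,
        "message": f"{len(records)} record(s) found.",
        "records": records,  # hypothetical key holding the Salesforce results
    }
    return 200, json.dumps(body)


status, body = build_webhook_response([{"Name": "Example Opportunity"}])
print(status, body)
```

Returning 200 with an empty `records` list when nothing matches (rather than an error code) lets the GPT simply tell the user that no records were found.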

Creating the Custom GPT

So far, we have a workflow in n8n that calls Salesforce with whatever queries we define. Next, here's how to set up a Custom GPT that authenticates and calls the Webhook to get this data for the end user. Here's what the user experience looks like:

Step 1: Create a Custom GPT:

If you're using a paid version of ChatGPT, you'll have the option to create a Custom GPT as shown here. I've named mine SalesforceGPT:

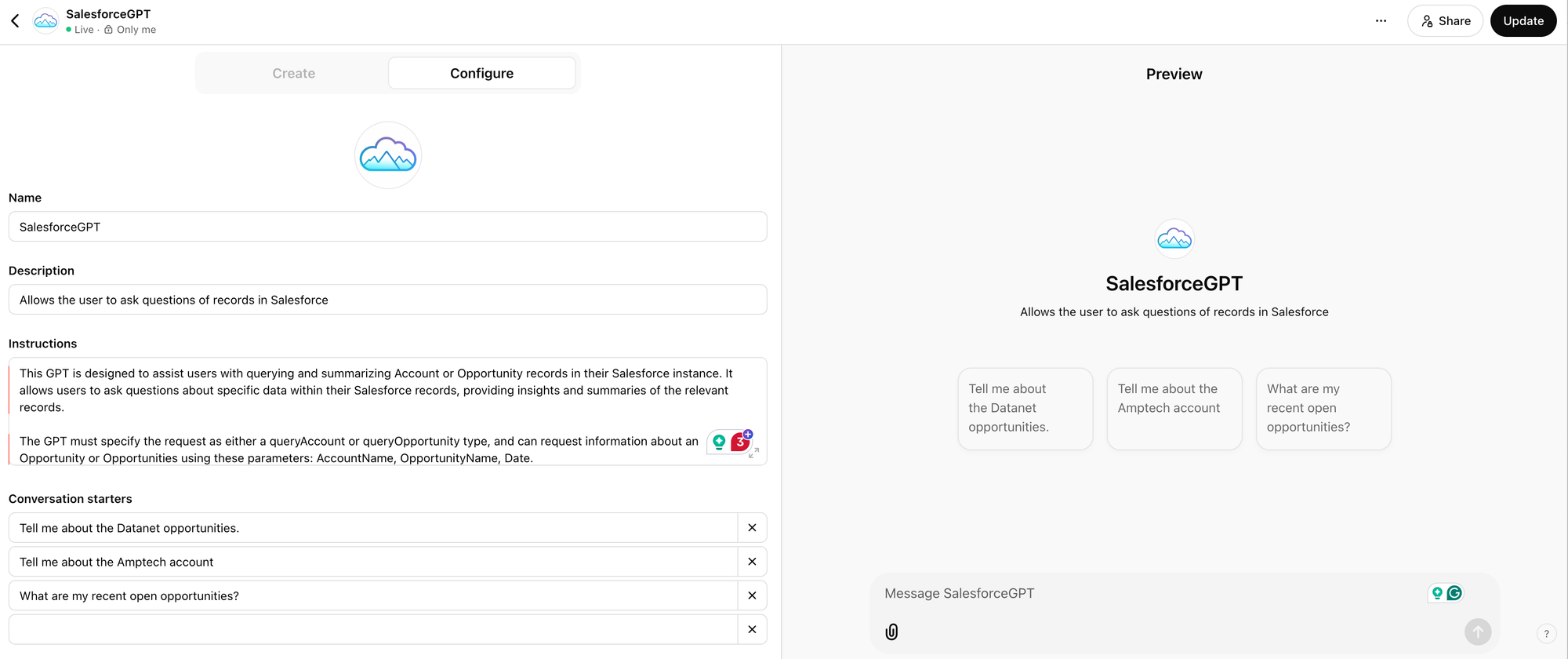

The Configuration Screen for the Custom GPT looks like this:

Step 2: Instructions

In the Instructions dialog box, I've entered a prompt that gives some specific details for how the Custom GPT should function.

This GPT is designed to assist users with querying and summarizing Account or Opportunity records in their Salesforce instance. It allows users to ask questions about specific data within their Salesforce records, providing insights and summaries of the relevant records.

The GPT must specify the request as either a queryAccount or queryOpportunity type, and can request information about an Opportunity or Opportunities using these parameters: AccountName, OpportunityName, Date.

If the user requests recent records, specify a Date parameter of 'recent' and a ForecastCategory of 'Pipeline'.

If the user requests Open or Pipeline Opportunities, specify a ForecastCategory of Pipeline. If the user requests Closed Opportunities, specify a ForecastCategory of Closed. If the user doesn't specify, use a ForecastCategory of Pipeline.

Opportunities should be summarized with the following format:

Opportunity Name: [Insert Here]

Account Name: [Insert Here]

Amount: [Insert Here]

Stage: [Insert Here]

Close Date: [Insert Here]

Salesforce URL: [Insert Here]

The Salesforce URL should be provided in this format: https://tom-sdo.lightning.force.com/lightning/r/Opportunity/[Opportunity Id]/view

Accounts should be summarized with the following format:

Account Name: [Insert Here]

Website: [Insert Here]

Description: [Insert Here]

Salesforce URL: [Insert Here]

The Salesforce URL should be provided in this format: https://tom-sdo.lightning.force.com/lightning/r/Account/[Account Id]/view

The GPT operates with a professional, concise, and helpful tone to support users in efficiently managing and understanding their Salesforce data.

Step 3: Create an Action

The next step is to create an Action. You can think of Actions as the external tools the Custom GPT can call to perform a task:

From here, we want to do two things:

- Set up Authentication using the API Key we created before.

- Set up our Schema to call our Webhook in n8n.

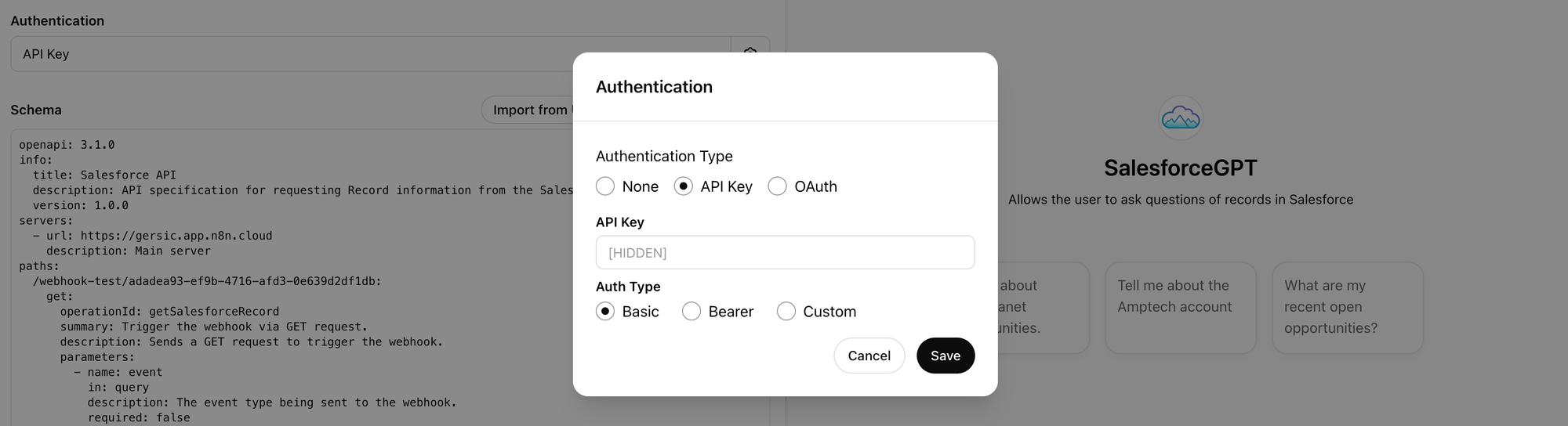

Step 4: Set up Authentication

Remember I said we'd return to setting up authentication on our Custom GPT later? Here it is: in the Action's authentication settings, choose API Key with the Basic auth type, and enter the API key we created earlier by Base64 encoding the username and password:

Step 5: Schema

To create the Schema, I asked the Actions GPT Custom GPT to generate it for me. Here's the resulting Schema.

Note: In the paths below, you'll see /webhook/adadea... This is the production URL on n8n. While developing your n8n integration, if you want to use the "Listen for test event" functionality, you'll need to change this to /webhook-test/adadea... (or whatever your URL string is). You can see both listed as the Test URL and Production URL in the n8n interface.

```yaml
openapi: 3.1.0
info:
  title: Salesforce API
  description: API specification for requesting Record information from the Salesforce instance.
  version: 1.0.0
servers:
  - url: https://gersic.app.n8n.cloud
    description: Main server
paths:
  /webhook/adadea93-ef9b-4716-afd3-0e639d2df1db:
    get:
      operationId: getSalesforceRecord
      summary: Trigger the webhook via GET request.
      description: Sends a GET request to trigger the webhook.
      parameters:
        - name: event
          in: query
          description: The event type being sent to the webhook.
          required: false
          schema:
            type: string
        - name: payload
          in: query
          description: A JSON string representing the payload of the webhook event.
          required: false
          schema:
            type: string
      responses:
        '200':
          description: Successful webhook processing.
          content:
            application/json:
              schema:
                type: object
                properties:
                  success:
                    type: boolean
                    description: Indicates if the webhook was processed successfully.
                  message:
                    type: string
                    description: Additional details about the processing result.
        '400':
          description: Bad Request. The input data is invalid or improperly formatted.
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
                    description: Details about the error.
        '500':
          description: Internal Server Error. Something went wrong while processing the webhook.
          content:
            application/json:
              schema:
                type: object
                properties:
                  error:
                    type: string
                    description: Details about the server error.
```
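To test the webhook outside ChatGPT, it helps to build the same request the Action will make. Here's a minimal standard-library Python sketch based on the schema above: a GET to the server URL plus the webhook path, with `event` and a JSON-encoded `payload` as query parameters and the Base64 token in a Basic `Authorization` header. The `test_mode` flag swaps in the /webhook-test/ path from the note about Test and Production URLs. The function name is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.parse
import urllib.request

SERVER = "https://gersic.app.n8n.cloud"
WEBHOOK_ID = "adadea93-ef9b-4716-afd3-0e639d2df1db"


def build_webhook_request(token: str, event: str, payload: dict,
                          test_mode: bool = False) -> urllib.request.Request:
    """Build (but don't send) the GET request described by the OpenAPI schema."""
    prefix = "webhook-test" if test_mode else "webhook"
    query = urllib.parse.urlencode({
        "event": event,
        "payload": json.dumps(payload, separators=(",", ":")),
    })
    url = f"{SERVER}/{prefix}/{WEBHOOK_ID}?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"}, method="GET")


req = build_webhook_request("dG9tZ2Vyc2ljOm15cGFzc3dvcmQ=", "queryOpportunity", {"AccountName": "Datanet"})
print(req.full_url)
# To actually send it against your own n8n instance:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status, json.loads(resp.read()))
```

Sending `payload` as a JSON string inside a query parameter mirrors the schema's `type: string` definition; the webhook side then has to parse that string before the Switch nodes can read its fields.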

Deficiencies and Further Improvements

It's worth noting that there are some deficiencies in this model and some areas in which improvements could be made:

- Branching Logic: ChatGPT can make some "agentic" decisions about which call to make to the Webhook in n8n, but once we are in the n8n workflow, the branching logic shown in this system is pretty limited. Adding additional features requires adding additional branches. This is mostly because a generic "Text to SOQL" system is, well, hard. A better system would allow an agent to make decisions and write the SOQL query based on the user's question.

- Vector Search: Salesforce now includes a Vector Database in its Data Cloud offering. Vector Search has the benefit of being able to do semantic similarity matching that isn't really possible to do just by using SOQL.

- User Authentication: Using one integration user to log into Salesforce from n8n has its benefits: you can roll this solution out to any user who has a ChatGPT Enterprise license without worrying about whether they also have a Salesforce license. This is a cost-effective way to give wall-to-wall access to information locked away in Salesforce. However, it also limits the data you can include to records that can be considered public-read. It also means that, while it's possible to create new records with this method, those records would be created by the integration user and not by the end user.

- Bearer Token Flow Instead of Basic Authentication: Basic Auth sends the same long-lived username and password, Base64-encoded but not encrypted, with every request, so they can end up exposed in logs or debugging output. An OAuth-based Bearer Token flow offers better security mechanisms, including short-lived tokens with refresh capabilities, and complies with modern OAuth standards.

- Integration with Other Systems: One of the really great things about ChatGPT Enterprise is that it gives end users an extremely functional experience for accessing data locked away in back-end systems without needing to swivel-chair between each of those systems. This example shows an integration with Salesforce, but a more advanced system could also access SharePoint, SAP, Workday, Confluence, Jira, GitHub, or any number of other systems.
